Will AR lenses ever work?

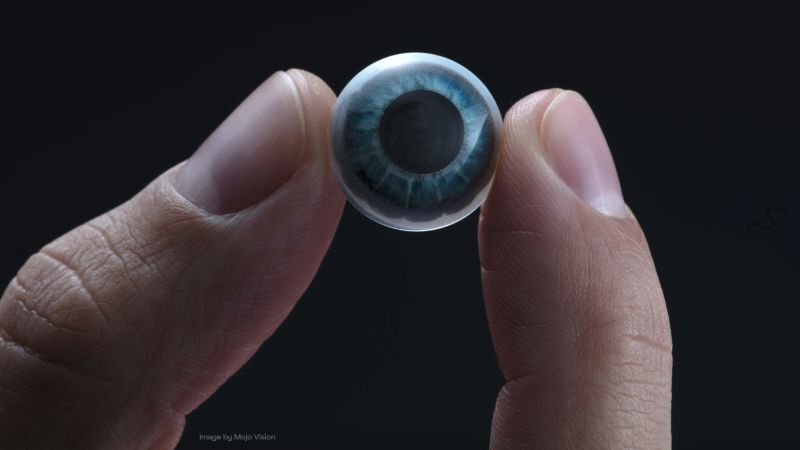

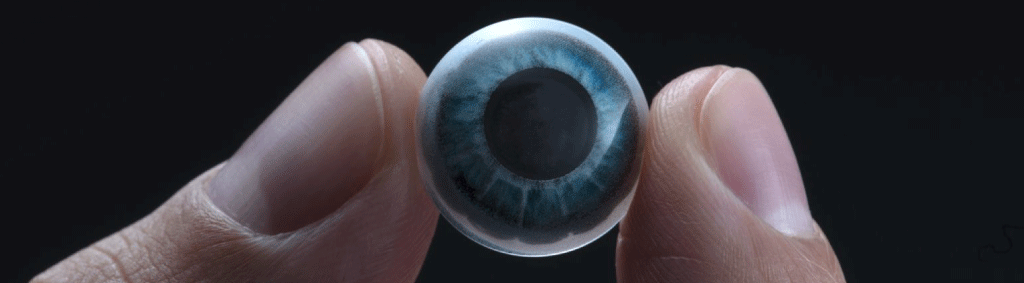

Everything seems possible with today’s technology. So, how about contact lenses that can display, overlay, and “augment” what we see? Shouldn’t be a big issue, right? First reactions to AR lenses will probably range from “crazy” to “cool” to “that’s physically impossible!”. And to some extent, they are all true. The first related patents date back to 1999. A single-LED prototype was presented in 2009 by the then-future inventor of Google Glass. And over the past two years, we have kept stumbling over articles about a company called “Mojo Vision”, which has already gathered more than $100 million in investor funds. In this article, we want to take a closer look at the most important challenges that such a technology needs to solve before anyone can even think about commercializing a product or hoping for widespread customer acceptance.

Let’s Answer the Question by Asking More Questions

Be warned: this article will pose many questions. For most of these questions, there simply aren’t any answers today. But let’s not despair: it is a fascinating topic that could potentially trigger a huge leap in the development of hardware and software in the AR space.

Challenges for useful and functional AR contact lenses arise from a wide variety of topics:

- Optics: How can the image be focused at all? Won’t the display block your actual view?

- Necessary components: Actual display, power supply, CPU and memory, data transfer, solving heat dissipation, …

- Miniaturization: How to fit all components inside a thin and necessarily transparent gadget.

- Eye tracking, moving reference frame: A display on the eyes is an entirely different concept compared to any other AR display.

- Simple/text augmentation: Human beings read text by moving their eyes — but a contact lens display will be fixed!

- “True” augmentation: Real-world and object recognition/annotation requires a camera or similar sensor.

- Interaction: How can users control/change what they see with just their eyes?

- Stereoscopic view for both eyes: Poses extreme constraints on positional precision.

- Safety: Protection from brightness/malfunction, toxicity of materials, oxygenation of eyes.

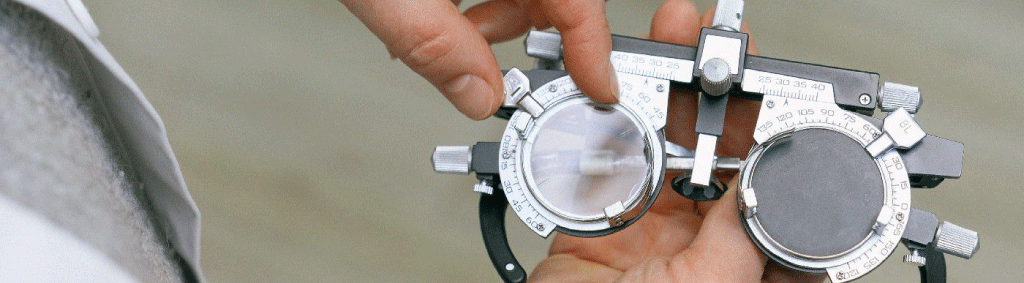

- Individualization: Needs individual prescription (micro-)lenses.

- Longevity vs. price: How long do ordinary contact lenses last? Will users of AR lenses be required to buy new ones every few months?

- Privacy/Security: Spying and eavesdropping concerns, data security of wireless transfers.

Optics — Keeping it Real

The most obvious problem when trying to focus something like a display located on a contact lens is that it is not possible. Period. An object on (or very close to) the surface of the lens simply cannot be in focus and cannot even form a real image — only a virtual one. In addition, the human eye itself cannot focus on anything closer than about 10 cm, and it is unable to put more distant objects into focus at the same time. Therefore, each pixel (or small set of pixels) of such a display requires a precisely tuned and positioned microlens or microlens array, to correct the optical path, and allow for a focused image on the retina.

However, at these scales (approx. 1 mm from the cornea), even small deviations from that alignment (such as changes in the distance from contact lens to cornea due to a varying amount of tear fluid, a loose fit, eye movements, etc.) can quickly result in a blurry image on the retina, as well as distortions of the perceived distance and size of the image. In short, that’s not good.
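The claim that a display sitting on the lens can only form a virtual image can be illustrated with the textbook thin-lens equation. The following is a minimal sketch, not a model of the real eye: it treats the eye as a single thin lens in air, and the focal length of 17 mm and the object distance of 1.5 mm are assumed values chosen only for illustration.

```python
import math

EYE_FOCAL_LENGTH_MM = 17.0  # assumed focal length of a simplified, relaxed eye


def image_distance_mm(focal_length_mm: float, object_distance_mm: float) -> float:
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    A positive result is a real image behind the lens (it could land on the retina).
    A negative result is a virtual image on the object side (nothing to project).
    """
    inverse = 1.0 / focal_length_mm - 1.0 / object_distance_mm
    if inverse == 0.0:
        return math.inf  # object exactly in the focal plane: image at infinity
    return 1.0 / inverse


if __name__ == "__main__":
    # Hypothetical object distances: a display on the lens vs. an object at 10 cm.
    for distance_mm in (1.5, 100.0):
        d_i = image_distance_mm(EYE_FOCAL_LENGTH_MM, distance_mm)
        kind = "real" if d_i > 0 else "virtual"
        print(f"object at {distance_mm} mm -> image at {d_i:.2f} mm ({kind})")
```

Any object closer than the focal length gives a negative image distance in this model, which is why additional microlenses are needed to redirect the light.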

The next problem is the occlusion of the real world by placing something in the optical path: no light from the real world will reach the retina where it is blocked by the display and its microlens arrays! In the ideal case (i.e. the occluding object is on or very close to the principal plane of the lens), the result would simply be a slight decrease in brightness. In reality, however, a display inside a contact lens is placed at such a large distance from the center of the lens that this distance is a significant fraction (about 30–40%) of the eye’s focal length. So, anything placed in the optical path will produce visible artifacts: diffraction fringes and localized brightness reduction (especially for very small, daylight-adapted pupils), blurring of sharp contours, as well as noticeable light and shade streaks (try it yourself: squint your eyes and look through your eyelashes). This is particularly detrimental if the display is placed on the optical axis, focusing on the fovea, the area of sharpest vision.

And what about where the image is actually seen, and how big it will be? The microlens array will need to precisely “spread out” the pixels instead of focusing them all on the same microscopic dot on the retina, which would result in an “image” without dimension. Similarly, there will be no augmentation in the peripheral field, unless the microlenses “project” some lower-resolution pixels at an angle into the eye.

Necessary Components

What about all the necessary hardware components, which need to be embedded either in the contact lens itself or in an additional external gadget that users need to wear close to their eyes? For an autonomous and self-contained embedded AR contact lens, you would need a lot of components, essentially a complete computer setup:

- The actual display itself, including the microlens array

- Power supply/storage

- ARM-type CPU

- Memory

- Periphery for data transfer, internet

- Eye tracking system (gyro/accelerometer)

- Real-world tracking system (camera)

- Interaction (gesture recognition)

And yes, we are still talking about something as small as a contact lens. Remember how people say it’s incredible how much technology can be found inside a modern smartphone? Compare that to something small enough to fit on your fingertip and then tell us how likely that seems to you.

Miniaturization. Making Things Really, Really Small.

While we are on the topic of small objects, let’s make it even more complicated: How do we get a lot of components inside a transparent object? You need an even smaller form factor than the one found in today’s smartphones and microelectronics.

Some parts you can probably hide behind an artificial iris, but this will impede your night vision (when the pupils are large) and your ability to see in a dark environment. But then again, improving your night vision is probably one of the reasons you would wear AR lenses in the first place…

What about heat dissipation/absorption? You certainly do not want anything touching your eye that heats up to above 42°C. Like ever. So, safety measures need to be taken, especially for possible equipment malfunction. Even if all components are low power, heat will be generated nonetheless. How will this heat dissipate? Are heat radiation and simple heat exchange with tear fluid enough? Somebody better figure that out before people burn their eyes.

Also, for a non-embedded version (which means one or several external gadgets that will reduce user acceptance immediately), everything needs to be transferred wirelessly: power via power induction (close to your eyes and brain, unless the battery is embedded in the lens), data via wireless transfer (close to your eyes and brain), even a full display feed/video stream (“remote display”).

Eye Tracking, Moving Reference Frame

The whole paradigm of how we look at something and how such an image needs to be presented is entirely different between an image on a contact lens, and any other existing display system. For photographs, monitors — in fact, anything in the real world, and even head-mounted displays for VR or AR — the images are essentially static, and it is the eyes that need to move and “scan” the image, to get a clear view of what is presented. Note that for HMDs, the image needs to rotate and move along with the head. However, head movements are significantly slower than eye movements, and the necessity for these image movements comes from “converting” the view alignment from a head-relative reference system to the “global” static reference system.

An image displayed on a contact lens needs to readjust to every single eye movement, and these eye movements, the so-called “saccades”, are essential for seeing. Saccades happen very frequently (several times per second) and they are extremely fast (several hundred degrees per second). Image display happens by definition in an eye-relative reference system, instead of the global real-world reference system. AR contact lens prototypes such as the ones presented at CES 2020 are currently oblivious to this essential distinction, as they are held in front of the eye, and therefore still exist in the real-world reference system. Technically, they do not represent a real AR contact lens that is compatible with our actual behavior and life.
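To get a feel for what readjusting to every saccade means, it helps to multiply eye velocity by display latency. The sketch below does only that. The velocity of 300 degrees per second stands in for "several hundred degrees per second", and the latency values are hypothetical, not measurements of any real device.

```python
def gaze_lag_deg(eye_velocity_deg_s: float, latency_s: float) -> float:
    """Angle the eye has rotated while the display still shows the old frame."""
    return eye_velocity_deg_s * latency_s


if __name__ == "__main__":
    SACCADE_VELOCITY_DEG_S = 300.0  # assumed, "several hundred degrees per second"
    for latency_ms in (1.0, 5.0, 20.0):  # hypothetical end-to-end latencies
        lag = gaze_lag_deg(SACCADE_VELOCITY_DEG_S, latency_ms / 1000.0)
        print(f"{latency_ms} ms latency -> image lags by {lag:.2f} degrees")
```

Even a latency of a few milliseconds corresponds to a misplacement of a degree or more while the eye is moving at that speed.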

There are, however, two physiological effects such a display device can take advantage of: one is the fact that it is always the same pixels that cover the same area on the retina (assuming the contact lens only moves within a few microns — which is actually unrealistic). This can be used to design the display hardware for true “foveated rendering”, meaning the pixel density associated with the fovea centralis can be much higher, and can be continuously decreased by several orders of magnitude for peripheral vision.

The other effect is called “saccadic masking”, which prevents the brain from processing visual input during and at the end of every eye movement. This can give the tracking system and image processor additional time before it actually needs to display an updated and correctly oriented image for each new eye position. Our brain is fast, but it’s not that fast. In this case, that can benefit the technology.

Nonetheless, an eye tracking system is an absolute must-have. Without it, AR contact lenses are practically useless. Currently, external eye tracking systems are generally cumbersome, because they use a combination of one or two cameras, several near-infrared LEDs, and fast image processing, detecting the relative positions of both pupil and LED reflections. For an embedded eye tracking system, it is essentially sufficient to measure the eye’s rotation around each axis. Inertial sensors can be used for this, and current MEMS gyroscopes may be sufficiently small (and fast enough) for this task. However, all inertial sensors are prone to integration drift, so it will be difficult to sufficiently reproduce absolute eye orientation all the time, without additional sensors.
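Integration drift is easy to demonstrate numerically. The following minimal sketch integrates the output of an idealized gyroscope while the eye is at rest. The constant bias of 0.05 degrees per second and the 1 kHz sample rate are hypothetical values for illustration, not the specifications of any real MEMS part.

```python
def integrate_gyro(rates_deg_s, dt_s: float) -> float:
    """Sum angular-rate samples into an orientation estimate (simple Euler integration)."""
    angle_deg = 0.0
    for rate in rates_deg_s:
        angle_deg += rate * dt_s
    return angle_deg


def simulate_drift(bias_deg_s: float, duration_s: float, sample_rate_hz: float) -> float:
    """Estimated angle after `duration_s` when the eye does not move at all.

    The true angular rate is zero, so everything the integrator reports is drift.
    """
    n_samples = int(duration_s * sample_rate_hz)
    dt_s = 1.0 / sample_rate_hz
    measured = [0.0 + bias_deg_s] * n_samples  # true rate (zero) plus sensor bias
    return integrate_gyro(measured, dt_s)


if __name__ == "__main__":
    for seconds in (1.0, 10.0, 60.0):
        drift = simulate_drift(bias_deg_s=0.05, duration_s=seconds, sample_rate_hz=1000.0)
        print(f"after {seconds:>4} s: estimated angle is off by {drift:.3f} degrees")
```

The error grows linearly with time even for a tiny bias, which is why an absolute reference from an additional sensor is needed to reset the estimate.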

Even with precise tracking, small movements of the contact lens in relation to the eye (especially due to the high-velocity saccades) will be unavoidable. Classical contact lenses that use a translating bifocal design actually rely on this fact. These movements must be compensated somehow, or otherwise the perceived image will keep shifting around, and may even lose focus. If we are dependent on the information we are trying to see, that can be annoying as well as potentially dangerous.

Simple/Text Augmentation. Sounds Simpler than It Is.

The whole principle of reading relies on fast eye movements, the “saccades”. When looking at any text, only very few letters (let alone words) will be in focus at any time, as anything too far outside the optical axis will no longer be projected onto the fovea centralis. So effectively, only one or two words can be read at any time. You don’t believe it? Try it out: Look at any word in this article and try to read neighboring words, or the words above and below it, but without moving your eyes. You will be surprised how limited your view actually is!

And here comes the problem of a reference system again: in the real world, text is static, and we read it by quickly moving to the next word or group of letters, without even noticing. But when we want to display text information in the contact lens, that text will move along with the eye. We simply cannot use saccades to “see” other parts of the displayed information unless the eye tracking updates the position of said information, making it once again static in the real world (as opposed to “always in the same place, no matter where we look”). Sounds complicated? That’s because it is. How is the lens supposed to decide when to move the virtual text/displayed part (because it is annotating an object), and when to keep it in place relative to the eye (in which case you are limited to two or three words)?
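The difference between the two reference systems can be written down in a few lines. This is a simplified sketch under stated assumptions: gaze and content directions are given as (yaw, pitch) angles in degrees, small angles are simply subtracted, and eye torsion and lens slippage are ignored. The function name and the `world_locked` flag are hypothetical.

```python
def eye_relative_position(gaze_deg, content_deg, world_locked: bool):
    """Where content must be drawn on the lens display, as (yaw, pitch) in degrees.

    gaze_deg:     current gaze direction in the world frame (from eye tracking).
    content_deg:  for world-locked content, its direction in the world frame;
                  for eye-locked content, its fixed offset from the line of sight.
    """
    if world_locked:
        # The content stays put in the world, so it moves across the display
        # opposite to every eye movement.
        return (content_deg[0] - gaze_deg[0], content_deg[1] - gaze_deg[1])
    # Eye-locked content ignores the gaze direction entirely.
    return content_deg


if __name__ == "__main__":
    label = (12.0, 1.0)  # hypothetical annotation, 12 degrees to the right
    for gaze in [(0.0, 0.0), (10.0, 0.0), (12.0, 1.0)]:
        print(gaze, "->", eye_relative_position(gaze, label, world_locked=True))
```

Only in the world-locked case does a saccade bring a different part of the content onto the fovea, and that case depends entirely on the accuracy of the eye tracking.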

“True” Augmentation

Finally, any form of “actual” augmentation of what we see (such as highlighting specific real-world objects, annotating or recognizing stationary and moving objects, overlaying road signs with language translation, etc.) will require sensory information from the environment. Usually this is done with an RGB or near-infrared camera, while specialized sensors might use depth sensors, laser ranging, ultrasound, or actual radar. Again, such an input sensor would have to be integrated into the lens itself — otherwise the user will be required to wear an additional external device.

And if the device is external, that sensor will be monoscopic, and more importantly, its position and view frustum will be different from what each contact lens “sees”. This will have to be compensated in order to match any augmentation with what your eye actually sees. Another major problem is that for any so-called “optical see-through” AR system (as opposed to “camera see-through”), it is only possible to overlay real-world things with something brighter, but not to diminish the visibility of specific real-world objects. As a result, any augmentation will suffer from the typical “translucent”, ghostly appearance that all optical see-through AR glasses share. You need an example? With the translation of road signs, it is not really possible to actually replace the original text, or even remove it. You can only add displayed content, and only if it is brighter than the actual real-world object. In addition, for occlusion of virtual objects and depth perception, an actual depth sensor is required; a simple camera input sensor will generally not suffice.
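The additive nature of optical see-through can be sketched as a per-pixel sum. The code below is an illustration only: colors are normalized RGB triples between 0 and 1, and clipping at 1.0 stands in for the eye or sensor saturating. Real perception is of course more complex.

```python
def optical_see_through(background_rgb, overlay_rgb):
    """Light from the display adds to light from the world; it can never subtract."""
    return tuple(min(1.0, b + o) for b, o in zip(background_rgb, overlay_rgb))


if __name__ == "__main__":
    white_sign = (1.0, 1.0, 1.0)
    dark_wall = (0.1, 0.1, 0.1)
    red_text = (0.8, 0.0, 0.0)
    black_text = (0.0, 0.0, 0.0)

    print("red on dark wall:    ", optical_see_through(dark_wall, red_text))
    print("red on white sign:   ", optical_see_through(white_sign, red_text))
    print("black on white sign: ", optical_see_through(white_sign, black_text))
```

In this model, an overlay on a bright white sign changes nothing, and a "black" overlay is simply invisible, which matches the road sign example above.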

Interaction: How to Communicate with Your AR Lens

How will you control what is being displayed? How will you interact with it? How will you select menus and functions? Using just your eyes, your options are essentially limited to staring and blinking, and the system cannot distinguish between “I am just looking longer at that part of the image” and “I want to trigger a button or menu selection” — let alone “I am now actually looking at a real-world object, and don’t want to trigger a menu interaction at all.”
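The ambiguity of "staring" becomes obvious as soon as one tries to implement it. A common heuristic, which is an assumption here and not something any particular product is known to use, is a dwell timer: a selection fires once the gaze has rested on a target for longer than a threshold. The sample format and the 0.8 second threshold below are hypothetical.

```python
def dwell_triggered(samples, target: str, dwell_s: float) -> bool:
    """Return True if the gaze rested on `target` continuously for at least `dwell_s`.

    samples: iterable of (timestamp_s, gazed_item) pairs in chronological order.
    """
    dwell_start = None
    for timestamp_s, item in samples:
        if item != target:
            dwell_start = None  # gaze left the target: reset the timer
            continue
        if dwell_start is None:
            dwell_start = timestamp_s
        if timestamp_s - dwell_start >= dwell_s:
            return True
    return False


if __name__ == "__main__":
    # The user merely reads the menu label for a moment, without intending to select it.
    reading = [(0.0, "menu"), (0.3, "menu"), (0.6, "menu"), (0.9, "menu")]
    print("selection fired:", dwell_triggered(reading, "menu", dwell_s=0.8))
```

The timer only sees how long the eye rested on the target. It has no access to the intent behind it, so a longer look triggers the selection whether or not the user wanted it.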

So again, you will need an external gadget or hand-held device (which is cumbersome), or hand gestures (requiring yet another motion sensor that needs to be integrated), or completely new eyes-only interaction metaphors that the user needs to learn first. And as the experience of the past shows, voice control has a very low acceptance by users (think Google Glass in public), and prevents you from actually utilizing your AR lenses in many situations where it’s supposed to be quiet (business meetings, cinema, church, …), while at the same time being adversely affected by environment noise (road traffic, live concerts, …). Also, it requires a microphone — yet another component added to your contact lens.

Stereoscopic View — Not for One Eye Only

Having only one contact lens that adds augmented content to your view can be irritating, especially when augmenting real-world objects. But putting an AR contact lens in each eye to allow for binocular vision and stereoscopic display of the augmented content places even more extreme constraints on the positional precision of these lenses. The angular rotation of the eyes (the “vergence”) for a depth position shift from, say, 1 m to 2 m is merely 0.9° per eye, corresponding to a shift of about 0.2 mm of a contact lens.

Now even so-called “retina displays” of current phones don’t offer more than 300–500 PPI (pixels per inch), although the first small-scale displays with more than 1,000 PPI already exist, and there are some monochrome prototypes that can even go up to 5,000–15,000 PPI. However, a full-color display of 1,000 PPI at a size of, for example, 1 mm on the contact lens would have a resolution of merely 40x40 pixels, and the parallax error of 0.2 mm must be compensated by shifting the image by roughly 8 to 9 pixels. Or, to put it another way: any error of even 1 pixel in such a device (or correspondingly, a shift of the contact lens’s position of 25 µm!) would already result in a depth perception error of about 5 cm at 1 m distance. Which means, any augmentation on top of the real world can easily wobble and shift positions. Not only sideways due to involuntary movements of the contact lens, but much more so in depth.
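The orders of magnitude in this section can be checked with basic trigonometry. The sketch below is a rough estimate under stated assumptions: an interpupillary distance of 64 mm, an eye radius of 12 mm, symmetric fixation straight ahead, and both lenses slipping by the same amount in opposite directions for the depth error. None of these values come from a specific device, so the results land near the figures quoted above rather than exactly on them.

```python
import math

IPD_MM = 64.0         # assumed interpupillary distance
EYE_RADIUS_MM = 12.0  # assumed radius of the eyeball
PPI = 1000.0          # display density used in the text
MM_PER_INCH = 25.4


def vergence_per_eye_rad(distance_mm: float) -> float:
    """Inward rotation of one eye when fixating a point straight ahead."""
    return math.atan((IPD_MM / 2.0) / distance_mm)


def lens_shift_mm(near_mm: float, far_mm: float) -> float:
    """Arc length on the eye surface for the vergence change between two depths."""
    delta_rad = vergence_per_eye_rad(near_mm) - vergence_per_eye_rad(far_mm)
    return EYE_RADIUS_MM * delta_rad


def pixel_pitch_mm() -> float:
    return MM_PER_INCH / PPI


def depth_error_mm(distance_mm: float, lens_offset_mm: float) -> float:
    """Perceived depth change if each lens is displaced outward by `lens_offset_mm`."""
    angle_error_rad = lens_offset_mm / EYE_RADIUS_MM
    theta = vergence_per_eye_rad(distance_mm)
    perceived_mm = (IPD_MM / 2.0) / math.tan(theta - angle_error_rad)
    return perceived_mm - distance_mm


if __name__ == "__main__":
    delta_deg = math.degrees(vergence_per_eye_rad(1000.0) - vergence_per_eye_rad(2000.0))
    shift = lens_shift_mm(1000.0, 2000.0)
    print(f"vergence change 1 m -> 2 m: {delta_deg:.2f} degrees per eye")
    print(f"equivalent lens shift:      {shift:.3f} mm")
    print(f"pixels across 1 mm:         {1.0 / pixel_pitch_mm():.1f}")
    print(f"shift in pixels:            {shift / pixel_pitch_mm():.1f}")
    print(f"depth error for 1 pixel:    {depth_error_mm(1000.0, pixel_pitch_mm()) / 10:.1f} cm")
```

Under these assumptions, a one-pixel misplacement at 1 m already moves the perceived depth by several centimeters, which is the same order of magnitude as the estimate in the text.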

In addition, a stereoscopic view requires the exact eye position instead of just the rotation, either by yet another inertial sensor (in this case, an accelerometer), or by using the camera-based real-world tracking technology mentioned above.

Safety First

There are also safety issues to be considered, of course: building an AR lens for dark environments would help minimize power consumption and heat generation. But what if we want to use the lenses outside, on a normal day? Especially under clear skies, white surfaces can easily reach a brightness of well over 1,000 cd/m². To be able to see anything augmented on top of that would necessitate an equally powerful display. By the way, what if the equipment malfunctions and pumps that full brightness into the center of your retina when you are back in a dark room and your eyes have already adapted to the dark? Remember, you cannot close your eyes to protect yourself from that, because the contact lens sits below your eyelid…
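The "equally powerful display" follows from a simple contrast estimate. As a sketch, assume that contrast is defined as the ratio of augmented luminance (background plus display) to background luminance alone, and that a ratio of 2 is the minimum for comfortably visible content. Both the definition and the threshold are simplifying assumptions.

```python
def required_display_luminance(background_cd_m2: float, contrast_ratio: float) -> float:
    """Display luminance needed so that (background + display) / background = contrast_ratio."""
    if contrast_ratio < 1.0:
        raise ValueError("an additive display cannot make the scene darker")
    return (contrast_ratio - 1.0) * background_cd_m2


if __name__ == "__main__":
    # Hypothetical background levels: dim room, bright office, sunlit white surface.
    for background in (10.0, 300.0, 1000.0):
        needed = required_display_luminance(background, contrast_ratio=2.0)
        print(f"background {background:>6} cd/m2 -> display needs {needed:>6} cd/m2")
```

The same display setting that is barely visible outdoors is far brighter than anything a dark-adapted eye sees indoors, which is exactly the malfunction scenario described above.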

Last but not least, what about toxicity of the involved materials, the electronics, or even an embedded battery? What about the standard issues of diminished eye oxygenation during prolonged wearing that even normal contact lenses already have? Imagine waking up after a night out with your AR contacts still in your eyes. It being unpleasant is probably the least of your worries.

Customizing Your Lens

As the necessary microlenses need to be finely attuned to the physical shape and parameters of your eye, they must also account for visual acuity correction, as well as the precise shape of your cornea. This means that each AR contact lens must be manufactured to the individual eye prescription parameters of each wearer, similarly to a prescription you get for your glasses. An additional problem is that people who wear corrective glasses (and who want to continue to do so), need individual contact lenses which ensure that only the microlens display is being adjusted and that they don’t correct/influence the regular field of view, as that has already been corrected by the glasses.

Longevity vs. Price

Due to the miniaturization and amount of technology that needs to be embedded in AR lenses and the necessary prescription individualization, there is no way AR lenses will come cheap. How long do you usually use a regular contact lens until you need to replace it? A few weeks? Months? Will you be willing to buy new AR contact lenses several times a year?

Privacy / Security and All that Jazz

And there will be the usual privacy, security, and ethical concerns, even more so for devices as small and inconspicuous as contact lenses. Many companies and institutions rely on data security and don’t allow any camera or recording devices on their premises. How will this be controlled? Do you need eye inspections and mandatory contact lens removal before entering such places, especially if the lenses use some form of built-in image recognition for augmented content? What about EM interference in planes etc.? And if any wireless data is transferred to external devices, how will intercepting these data streams for malicious purposes be prevented? Or, the other way around: what if people can hack that data stream and send you very different images? Let’s say a white image at maximum brightness. Or insanely flickering, epilepsy-triggering imagery, etc.

Making the Impossible Possible? Maybe One Day

Many of these open issues can be solved. But for the majority of questions raised above, there currently aren’t any suitable answers and viable solutions. It is dangerous to start the hype now and promise the moon, when a decent, working solution that is acceptable to users is still at least 5 to 10 years away. On the other hand, the time from first research to a viable, commercial product is generally long, quite often about 20 years, even nowadays. Right about now would be a good time to dive into the fundamental research of such a technology as it does have a lot of potential.

Just not in the present.

And don’t expect to buy a contact lens on which you can watch 4K movies on Netflix any time soon. Or one that displays the name tags of the people you are in a meeting with. Or lets you do internet searches while conversing with somebody. Or even read a book. Investors and especially the media can be easily blinded by misleading and deliberately photoshopped marketing videos, and even by misrepresentation of physical facts. It is therefore vital to think carefully about the problems such a technology brings with it. We do see possibilities for aiding people with visual impairments beyond the usual function of regular contact lenses, as well as for supplementary medical sensor input. These will most likely become the first usable devices, as opposed to a fully augmented high-resolution AR lens that does not require any external gadgets.
